Google Assistant’s ‘Sound Search’ feature now identifies the music playing around you faster, thanks to an updated neural network design.

The search giant says it quadrupled the size of its neural network and altered how the algorithm identifies a song to make it more like ‘Now Playing.’

Now Playing was released by a Google AI team in 2017 alongside the company’s Pixel 2 phones. It’s a low-power, offline, always-on music recognition system that indexes small slices of songs as an identifier for the whole track. When the team built Now Playing, they didn’t expect it to perform as well as it did, so they’re now retooling Sound Search’s online music recognition system with the updated technology.

To make Now Playing small enough to run in the background, the team developed a system that uses convolutional neural networks to turn a section of music into a digital fingerprint for the whole song. For Sound Search, Google stores these musical fingerprints on its servers, so users have to be online to take advantage of it.

Now Playing compares music fingerprints to 10,000 or more relevant song fingerprints that are stored on-device. The new version of Sound Search draws on tens of millions of song fingerprints in the cloud.
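
As a rough illustration of that matching step, and not Google’s actual implementation, the sketch below compares a query fingerprint against a small database of stored fingerprints and returns the closest song. The fingerprint values, the song titles, the `best_match` helper, and the use of cosine similarity are all assumptions made for the example.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector cannot match anything
    return dot / (norm_a * norm_b)


def best_match(query, database):
    """Scan every stored fingerprint and return (title, score) of the closest one."""
    best_title, best_score = None, -1.0
    for title, fingerprints in database.items():
        for fingerprint in fingerprints:
            score = cosine_similarity(query, fingerprint)
            if score > best_score:
                best_title, best_score = title, score
    return best_title, best_score


# Hypothetical toy database: each song has a few short fingerprints.
database = {
    "Song A": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "Song B": [[0.0, 0.9, 0.4], [0.1, 0.7, 0.6]],
}

# Hypothetical fingerprint computed from a few seconds of recorded audio.
query = [0.05, 0.85, 0.45]

title, score = best_match(query, database)
print(title, round(score, 3))
```

A linear scan like this is only workable for a tiny database. With tens of millions of songs, a real system would need an index that narrows down the candidates before comparing them in detail.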

There are a few differences between the two music recognition systems. The online version has a larger neural network since it’s less constrained by hardware, and it doesn’t have to compress the music as much. As a result, the company can add more identification tags per song so that songs can be identified more quickly.

The team wants to improve the feature’s ability to work in noisy environments and, if possible, make it even faster.

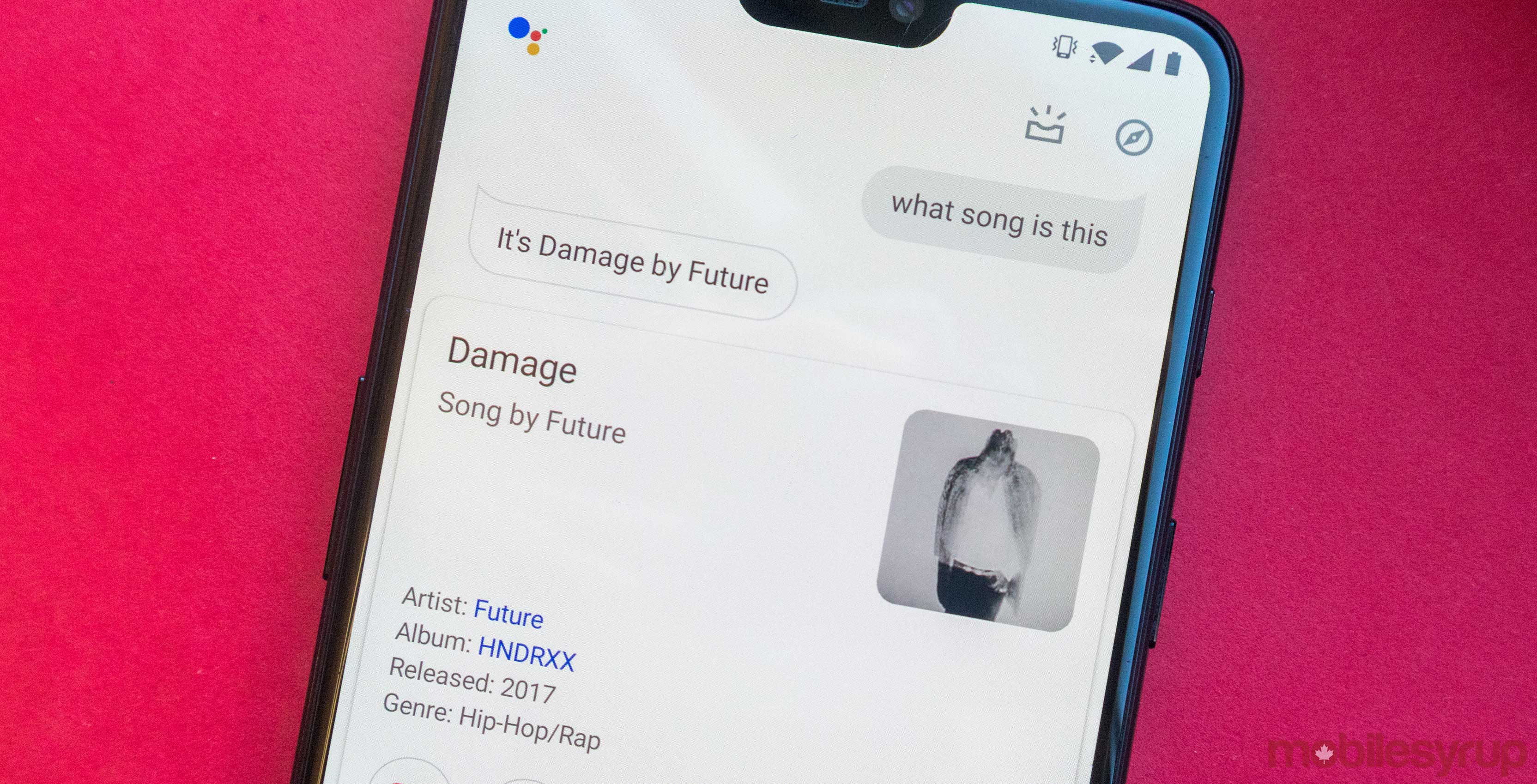

It’s worth noting that when I tested the feature through the Google Search bar on the home screen of a OnePlus 6, it said the feature wasn’t available in my country. When I tested it in the Google app, it worked.

Source: Google
