Apple has pulled back the curtain on a handful of new accessibility features ahead of WWDC.

The tech company hasn’t shared when these will be added to iOS or iPadOS, but with WWDC so close, it stands to reason that they’ll be part of iOS 18 in the fall.

Eye tracking for iPad and iPhone

Using the existing front-facing cameras in iPhones and iPads, Apple says it can track your eyes and use them as a functional cursor. You can combine this with Apple’s ‘Dwell Control’ so that holding your gaze on an item selects it.

This feature is incredibly cool and works much like navigation on the Apple Vision Pro headset. In a press release, Apple says it will work on existing Apple products with no extra hardware needed, but this likely means only devices that can support iOS 18 and iPadOS 18. That will still be a ton of phones and tablets, but not every single one.

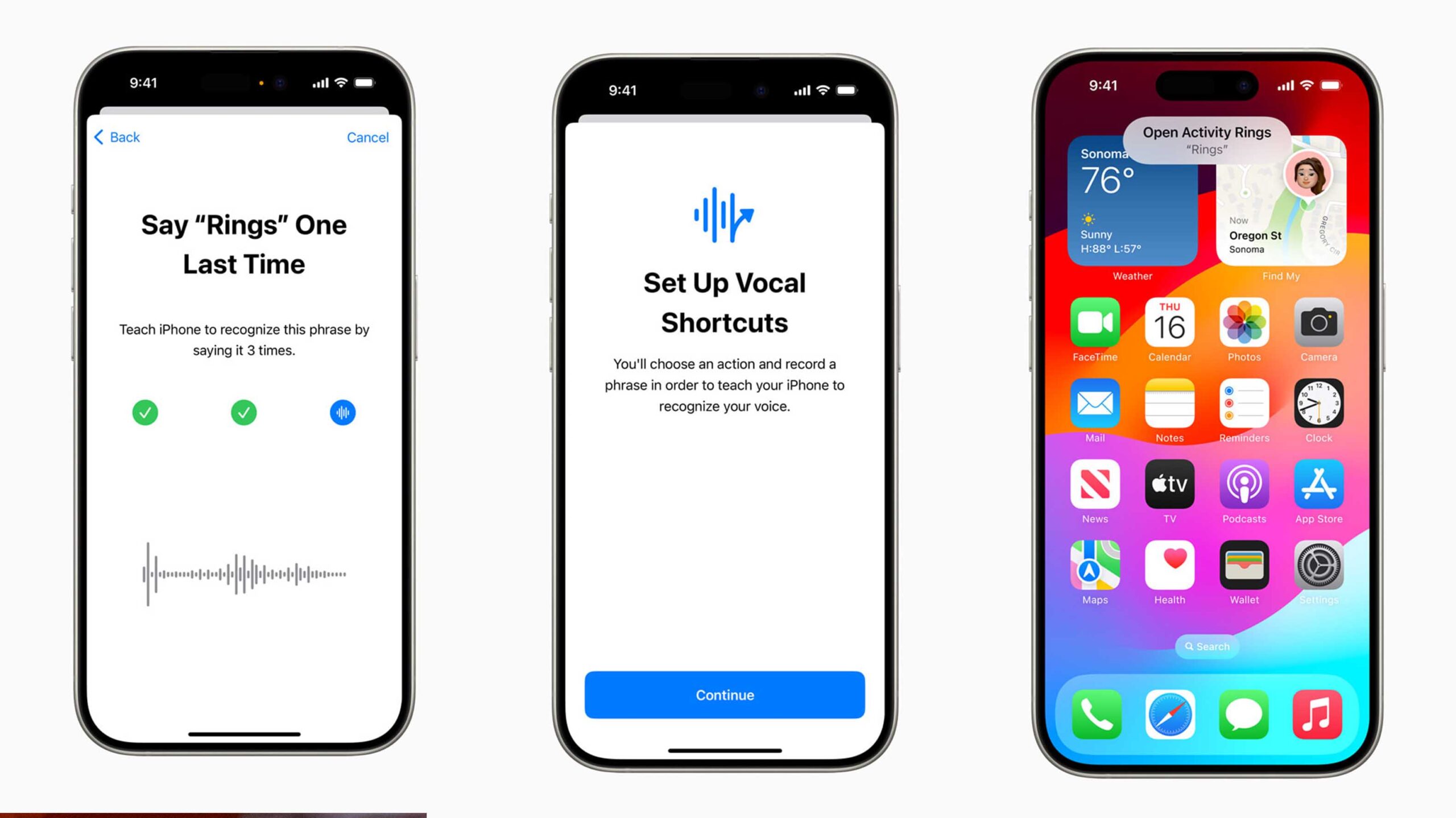

Vocal Shortcuts

iPhone power users will be excited about this next feature since it allows you to trigger Shortcuts with your voice. By mapping a shortcut to a keyword in iOS 18, you’ll be able to launch your favourite iOS/iPadOS tools hands-free. This likely opens the door to being able to say things like “Hey ChatGPT” to launch OpenAI’s voice assistant.

To take this further, Apple is also using machine learning to help its devices better understand people with atypical speech patterns. In the press release, the company mentions that this should help people with ALS or cerebral palsy better interact with voice features on Apple hardware.

A cure for screen-induced motion sickness

This is a feature I’m very excited to have my partner test. She’s never been great with using her phone on long drives and it will be interesting to see how this new feature works.

The idea behind it is that an array of black dots at the edge of your screen will flow with the movement of the car to help offset the disconnect people feel when they’re moving, but their screen is static.

Other cool updates

Apple is adding a virtual trackpad to AssistiveTouch. This will allow users to designate a small portion of the screen as a trackpad, giving them a mouse pointer on iPhone or iPad without the need for extra hardware.

A new reader mode will also be part of the iPhone’s built-in magnification tool. The press release implies that it can be mapped to the Action Button.

CarPlay is getting a few accessibility updates to bring it up to par with modern iOS and iPadOS accessibility features. These include Voice Control, Colour Filters and Sound Recognition.

For people who are hard of hearing, Apple is also adding a new Music-to-haptics API that will allow iPhones to vibrate in time with the music to help everyone feel the beat. This will launch in Apple Music first, but Apple says other apps will be allowed to use the API.

Source: Apple
