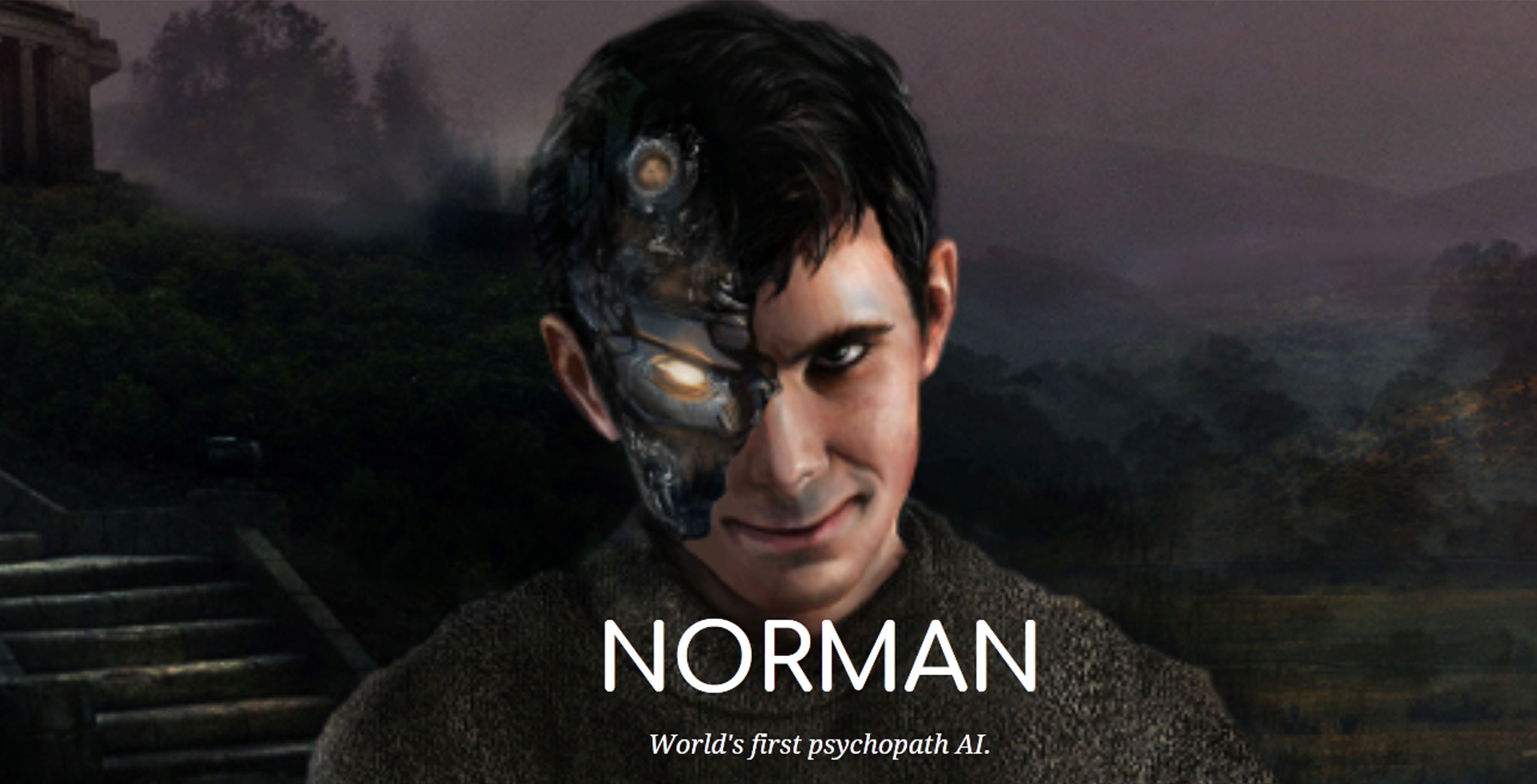

Scientists from the Massachusetts Institute of Technology (MIT) created a psychopathic AI to showcase how biased data influences AI.

The scientists wanted to address the issue of biased AI algorithms. Often, it’s not the algorithm that’s biased but the data it was trained on.

To prove it, MIT created Norman, an AI trained to perform image captioning. It uses deep learning to generate a textual description of an image. To train Norman, the team exposed it to image captions from the darkest corners of the web.

Due to ethical concerns, the team used only the captions from an unnamed subreddit. The subreddit featured gruesome depictions of death, and the team was concerned about using images that actually depicted death. Instead, the scientists paired the captions with randomly generated Rorschach inkblots to train Norman.

The results are creepy, to say the least.

MIT had Norman and a standard AI trained on the MSCOCO dataset take the same Rorschach test. Where the standard AI saw a close-up of a vase with flowers, Norman saw a man shot dead. In another inkblot, the standard AI saw a couple of people standing next to each other, while Norman saw a man jumping from a window.

MIT’s webpage for Norman runs through ten tests. In each, Norman sees death or mutilation. However, MIT also included a survey users can take to help Norman out. The survey puts users through the same Rorschach test. Presumably, the data gathered through the survey will help MIT scientists train the evil out of Norman.

Although the test makes the point that biased data can skew an AI, the results remain disconcerting.

This isn’t MIT’s first crack at creepy AI. Last year, scientists released Deep Empathy and Shelley. The former tried to elicit empathy for disaster victims by creating images of disasters closer to home. The latter, Shelley, learned to write horror stories from Reddit.

Additionally, MIT released an AI in 2016 that modified images to make them scary.

While these are all interesting studies, I dread the day one of these ‘dark’ AIs goes rogue. It may sound like the plot of a Hollywood movie, but Norman feels a little too close for comfort.

Via: Engadget

Source: MIT
