Roughly a year ago, Google unveiled a significant revamp of its Lens app that included real-time recognition and integrations with native camera apps. Now, it looks like Google is working on another major update, including ‘recognition filters’ and more.

9to5Google decompiled the APK for the latest version of the Google app, 9.72, from the Play Store and uncovered several pieces of unfinished code pointing to these new Lens features.

As with all APK teardowns like this, it’s essential to take things with a grain of salt. Some code could be misinterpreted, and some features might never be finished.

With that said, let’s dig into the teardown.

Lens could get translate, document, shopping and food filters

To start things off, Google reportedly began adding ‘filters’ for Lens in app version 9.61, and version 9.72 now includes the skeleton of an interface for selecting them.

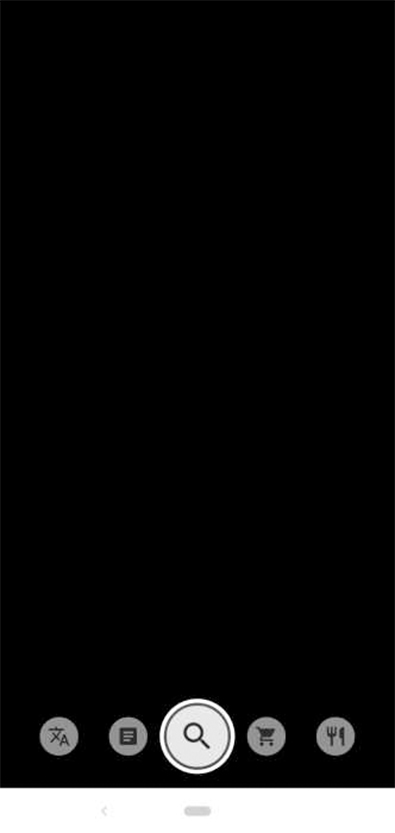

9to5Google managed to enable the in-development interface, which shows five icons in a carousel at the bottom of the screen. The middle icon is a magnifying glass, likely denoting general search.

The other icons likely represent search filters, which could put Lens into a specific mode focused on a particular task. For example, the left-most icon resembles the Google Translate app’s icon. A ‘translate’ filter could tell Lens to concentrate on translating text into your language.

Additionally, 9to5Google suggests Google could improve Lens’ translation feature. Instead of simply recognizing foreign text and offering a link to Google Translate, Lens could ‘auto-detect’ the language and translate the text from the ‘source’ language into a ‘target’ language.

Beside the translate filter is an icon that looks like a document. This could be for some kind of document scanning tool. On the right side of the carousel are ‘shopping’ and ‘food’ icons. The former could be for identifying clothes, furniture and other products you may want to purchase, while the latter could be an AR feature for finding nearby restaurants.

Regardless of what the filters do, the point seems to be refining search results based on what the user wants to know, instead of having Google’s AI take a best guess based on what the camera sees.

As for when these new features might come to Lens, I think Google will announce them at I/O 2019. If so, they would likely roll out sometime after I/O, perhaps in the summer.

Source: 9to5Google

MobileSyrup may earn a commission from purchases made via our links, which helps fund the journalism we provide free on our website. These links do not influence our editorial content.