Intel’s newest desktop central processing units (CPUs) have arrived, boasting improved performance and higher speeds, despite still using the company’s ageing 14nm process.

There are plenty of questions around Intel’s new processors, first and foremost whether these new CPUs can fend off AMD’s excellent 7nm Ryzen chips.

However, for the purposes of this overview, I chose to explore a different avenue, in part because the ‘Intel vs. AMD’ debate has been tackled by several other outlets already and in part because, at the moment, I don’t have the means to test the Ryzen hardware. Instead, I approached Intel’s new products from the perspective of someone who already runs Intel in their PC and might be considering an upgrade.

One thing that stood out to me about Intel’s new CPUs is that the company kept comparing them to previous generations of Intel CPUs. If you look back to the 10th Gen Core announcement post, Intel kept comparing performance against three-year-old PCs with Intel hardware. The argument was that people typically upgrade once every three to four years.

That makes sense, and it also put me squarely in the target demographic: I had a four-year-old Intel PC in need of an upgrade. So when Intel sent over some of the new 10th Gen CPUs to test, I thought it’d be a great opportunity to see how a CPU upgrade would directly benefit me, everything else being equal. If you’re curious about how that went, check out the full story here.

This piece, on the other hand, will focus almost exclusively on the new CPUs and whether they’re worth an upgrade for the average gamer who also uses their PC for light video and photo editing.

What’s inside Intel’s 10th Gen Core CPUs

The first CPU I tested was the i5-10600K, which boasts six cores and 12 threads, a base frequency of 4.1GHz and a max turbo frequency of 4.8GHz.

To me, this seemed like an almost perfect direct upgrade from what I was running, a 6th Gen Core i5-6500. The 6500 launched back in 2015 and I picked one up in 2016 when I built my PC, largely on the advice that an i5 would be “more than enough” for gaming. Spoiler: it was not. Interestingly, the 6500 is also built on a 14nm process.

The other CPU I tested was the Core i9-10900K, the flagship of Intel’s new products. The 10900K sports 10 cores and 20 threads, a 3.7GHz base frequency and a 5.3GHz max turbo frequency. Further, the i9 has a 5.3GHz Intel Thermal Velocity Boost (TVB) frequency. TVB can automatically raise the clock frequency above the single-core and multi-core turbo boost frequencies, depending on how far the CPU is operating below its maximum temperature and power budget.

Additionally, Intel’s Turbo Boost Max 3.0 technology automatically detects the best performing core on a processor and pushes it harder. However, when running in this mode, the i9’s max frequency becomes 5.2GHz.

Both 10th Gen CPUs are built on the 14nm process and sport 125W TDPs.

Significant performance uplift

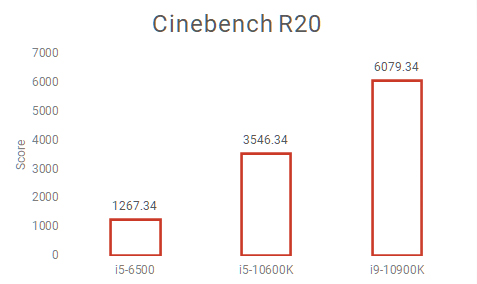

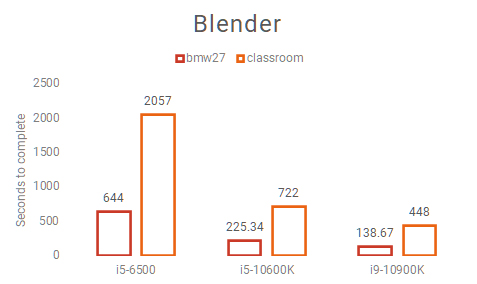

While those numbers are impressive, numbers are just numbers until you put them to the test. I ran my old i5-6500 through a gauntlet of benchmarks alongside the 10th Gen i5 and i9 to see just what those numbers can do.

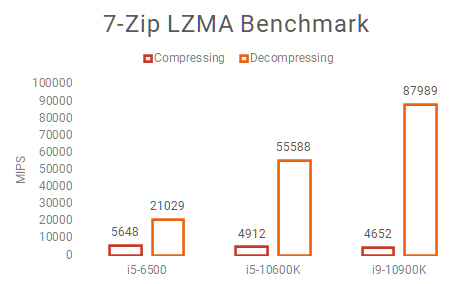

Testing included four CPU benchmarks: Cinebench R20, two Blender tests (bmw27 and classroom) and 7-Zip’s LZMA benchmark tool, which I ran for five minutes. The 7-Zip tool measures compression and decompression speed in million instructions per second (MIPS).

Additionally, I ran a few GPU benchmarks to test what impact, if any, the new CPUs had on GPU performance. I also tested some games, both through built-in benchmarks and in actual gameplay, to see what benefits the 10th Gen i5 and i9 brought to the table.

The test bench used for the benchmarks included 16GB of DDR4 RAM, an AMD Radeon RX 5600 XT GPU and a 128GB SSD to boot Windows 10 and run the benchmarks. It’s worth noting that some of the game tests ran off hard drives instead of SSDs, but that shouldn’t significantly impact the performance metrics I measured. Additionally, due to a change in socket type, the i5-6500 uses a different motherboard than the 10th Gen CPUs. Again, it shouldn’t significantly impact performance scores, but since anyone making the leap to a 10th Gen will have to upgrade their motherboard, any performance benefit will count in the CPUs’ favour.

Unsurprisingly, the CPU benchmarks showed a massive gain in performance. In Cinebench, the 10600K more than doubled the score of the ageing 6500. While the 10900K did beat out the 10600K by a large margin, it didn’t quite double the 10th Gen i5’s score.

As for Blender, once again the new CPUs benched much better. The 6500 took forever to complete both benchmarks, needing almost 11 minutes for the bmw27 test and over 35 minutes for classroom.

However, the 10600K and 10900K completed bmw27 in about three minutes and 45 seconds and two minutes and 18 seconds respectively.

The classroom test also saw impressive results from the 10th Gen i5, which completed it in just over 12 minutes, while the i9 finished in almost seven and a half minutes.
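To put rough numbers on those render times, here is a minimal Python sketch that turns them into speedup factors over the 6500. The times are rounded from the figures quoted above ("almost 11 minutes" is treated as 11 minutes, and so on), so the results are approximations, and the names `RENDER_SECONDS` and `speedup` are my own for illustration.

```python
# Approximate Blender render times in seconds, rounded from the figures
# quoted in the text (these are not exact measurements).
RENDER_SECONDS = {
    "bmw27": {
        "i5-6500": 11 * 60,          # "almost 11 minutes"
        "i5-10600K": 3 * 60 + 45,    # "about three minutes and 45 seconds"
        "i9-10900K": 2 * 60 + 18,    # "two minutes and 18 seconds"
    },
    "classroom": {
        "i5-6500": 35 * 60,          # "over 35 minutes"
        "i5-10600K": 12 * 60,        # "just over 12 minutes"
        "i9-10900K": 7 * 60 + 30,    # "almost seven and a half minutes"
    },
}


def speedup(baseline_seconds, new_seconds):
    """Return how many times faster the new chip finishes the same render."""
    return baseline_seconds / new_seconds


for scene, times in RENDER_SECONDS.items():
    baseline = times["i5-6500"]
    for cpu, seconds in times.items():
        print(f"{scene:10s} {cpu:10s} {speedup(baseline, seconds):.2f}x")
```

With these rounded inputs, the 10600K lands at a bit under three times the 6500's speed in both scenes, and the 10900K at a bit under five times.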

Finally, the 7-Zip benchmark revealed an interesting twist with the performance. The MIPS rating for compression fell in each test, with the Core i9 scoring lowest at 4652 MIPS, almost a thousand below the 6500. Based on the 7-Zip benchmark page, this discrepancy likely has to do with RAM latency, which can significantly impact compression testing.

Decompression, on the other hand, isn’t impacted by RAM as much. It better measures the advantages of things like CPU architecture and hyper-threading. The test results showed huge gains in MIPS from 21,029 on the 6500 to 87,989 on the 10900K.
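For a sense of scale, the decompression gain can be worked out directly from the two scores above. This is just a small Python sketch of the arithmetic; `relative_gain` is a hypothetical helper name, not part of 7-Zip.

```python
# 7-Zip decompression scores (MIPS) as reported in the text.
DECOMPRESSION_MIPS = {"i5-6500": 21_029, "i9-10900K": 87_989}


def relative_gain(old, new):
    """Return (multiple, percentage increase) going from old to new."""
    ratio = new / old
    return ratio, (ratio - 1) * 100


ratio, pct = relative_gain(
    DECOMPRESSION_MIPS["i5-6500"], DECOMPRESSION_MIPS["i9-10900K"]
)
print(f"{ratio:.2f}x the 6500's score ({pct:.0f}% higher)")
```

That works out to a little over four times the 6500's decompression score.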

Diminishing returns

However, synthetic benchmarks are only part of the story. In a real-world use environment, I found that the 10600K felt like a marked improvement over the 6500. However, the i9 didn’t feel significantly faster than the 10th Gen i5 despite clearly benching better.

Whether I was browsing the web, editing photos or doing other day-to-day tasks, my desktop felt significantly snappier on 10th Gen Intel hardware. Moving large files around, such as pulling RAW photos off my camera, or saving images in Photoshop felt instant whereas the 6500 seemed sluggish by comparison.

Ultimately, the 10900K didn’t feel like a significant step up over the 10600K in typical use. However, I’d also argue that my typical use likely doesn’t significantly stretch the i9’s legs. If you find yourself picking between the two, strongly consider what you’ll be doing with your computer. The jump from the $399 10600K to the $749 10900K likely won’t be worth the cost if your use case doesn’t take full advantage of the CPU.
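One rough way to weigh that price jump is to set it against a workload that does scale with cores. The Python sketch below compares the price ratio with the bmw27 render-time ratio from earlier in this piece. It is an illustration under my own assumptions (a single benchmark, the prices quoted here, and hypothetical names such as `premium_vs_gain`), not a complete value analysis.

```python
# Prices quoted in this article and bmw27 render times from the Blender test.
PRICES = {"i5-10600K": 399, "i9-10900K": 749}
BMW27_SECONDS = {"i5-10600K": 3 * 60 + 45, "i9-10900K": 2 * 60 + 18}


def premium_vs_gain(cheap, pricey):
    """Return (price ratio, speed ratio) of the pricier chip over the cheaper one."""
    price_ratio = PRICES[pricey] / PRICES[cheap]
    # A lower render time means a faster chip, so divide the cheaper
    # chip's time by the pricier chip's time.
    speed_ratio = BMW27_SECONDS[cheap] / BMW27_SECONDS[pricey]
    return price_ratio, speed_ratio


price_ratio, speed_ratio = premium_vs_gain("i5-10600K", "i9-10900K")
print(f"Price: {price_ratio:.2f}x, bmw27 render speed: {speed_ratio:.2f}x")
```

On these figures the i9 costs roughly 88 percent more and renders bmw27 roughly 63 percent faster. For workloads that don't scale with core count, the premium buys less.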

If you’re a gamer, however, your consideration may change.

Depending on what you play, your CPU could make a huge difference

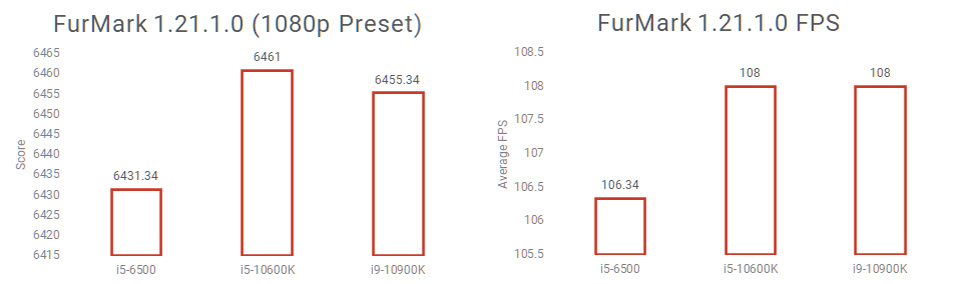

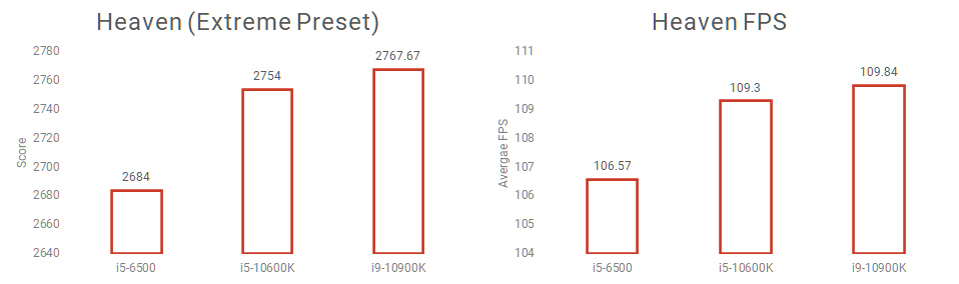

Let’s kick things off with a look at some synthetic GPU benchmarks. Unsurprisingly, the CPUs didn’t significantly impact the outcome of tests designed to stress the GPU.

First up, I ran FurMark version 1.21.1.0 with the 1080p preset, which scored over 6400 points on all three CPUs. There was a slight gain of less than two frames per second (fps) going from the older 6th Gen chip to the newer 10th Gen CPUs, which both registered 108fps averages. Interestingly, the 10900K scored six points lower on average than the 10600K, but I don’t think that’s indicative of a difference in performance between the two. If anything, it could be due to the i9’s lower base clock.

Similarly, the Heaven benchmark on the Extreme preset saw a score jump from 2684 on the 6500 to 2754 on the 10600K. It jumped again to 2767.67 on the 10900K. Framerates also slightly increased from the 6500 to 10600K but didn’t see a significant improvement to the 10900K.

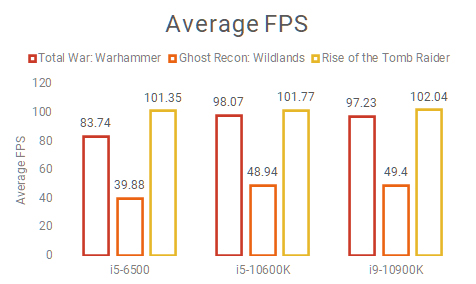

In the first round of game tests, I used each game’s built-in benchmarking tool, and every game ran at its highest settings preset. Interestingly, Total War: Warhammer and Ghost Recon: Wildlands both saw their biggest jump going from the 6500 to the 10600K, an improvement of about 10-15fps. Rise of the Tomb Raider, on the other hand, maintained consistent averages regardless of the CPU.

In all three tests, the 10900K’s average was within about one frame of the 10600K’s. The biggest factor here would be the CPU usage, which was consistently lower on the 10th Gen processors than the 6500. In other words, I was bumping up against the upper limits of the 5600 XT, but the good news here is that both 10th Gen CPUs, especially the 10900K, should have lots of headroom for future gaming.

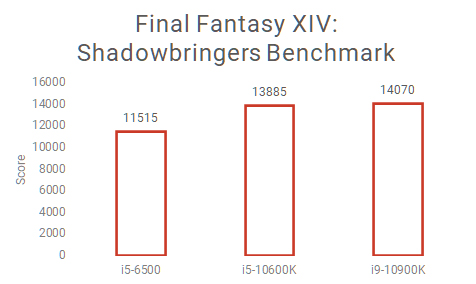

The Final Fantasy XIV: Shadowbringers benchmark assigned a score based on performance instead of providing the average fps like the others. While ultimately less informative by comparison, it does indicate that the 10900K can provide some significant performance increase in some titles.

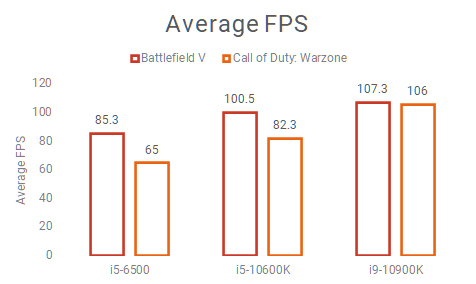

However, the actual gameplay tests I ran were the most telling. In both Battlefield V and Call of Duty: Warzone, the 10900K made a significant difference in gameplay. The caveat here is that, unlike the other games, neither BFV nor Warzone offers a preset, repeatable benchmark. Instead, I recorded the average framerate I got over a few sessions of a given game mode. The downside is that results can vary with things like an easier-to-render map or a smaller player count. Still, I think the results are telling for both.

BFV saw about a 15fps increase from the 6500 to the 10600K and another 7fps to the 10900K. The most interesting factor here is that the 6500 sat at 90-plus percent CPU usage throughout the session while the 10600K hovered around 60-70 percent usage. The 10900K was by far the lowest at around 40 percent.

While I wasn’t able to monitor CPU usage data in Warzone, the game also saw significant benefits from upgraded processors, with about a 15fps jump between each CPU.

Both of these games have very high player counts, with BFV offering up to 64 gamers in a match while Warzone can have up to 150. That increases load on the CPU significantly (for example, past tests I’ve done in BFV with the older hardware saw much higher fps in single-player modes than multiplayer with the only real difference being number of players).

Consider your options

Ultimately, I think there are a few takeaways from the above results. On the one hand, making the switch from an older CPU to a newer one can bring some significant benefits both for productivity and gaming. However, temper your expectations — a new CPU won’t have nearly the same impact as, for example, a new GPU [link to companion 5600 XT story].

Further, the impact a new CPU has is heavily dependent on the game and other factors like the hardware it’s paired with. Your mileage in games may vary if you’re running more powerful graphics hardware, playing more CPU-intensive games or trying to achieve resolutions higher than 1080p. I opted to test at 1080p since that’s what the majority of gamers still choose to play at.

If 1080p is your goal, the 10600K will likely be more than enough CPU for you, plus it will have some overclocking room if you’re into that. However, depending on how future games take advantage of things like higher CPU speeds and increased core counts, the 10600K may struggle in the future.

The 10900K is certainly a more futureproof option, but at $749 it is an expensive one. If you do streaming, video editing or any other tasks along with gaming, the extra cost may be well worth it for the benefits it’ll bring in those areas. And while I didn’t get to test it, the Core i7-10700K could serve as an excellent middle ground between the two options at $579.

Performance at what cost

Aside from the upfront price, there are other costs to consider with Intel’s 10th Gen products. Although the company integrated a new thermal solution that helps with temperatures, I still found the CPUs ran a bit on the hot side.

The 10600K fared the better of the two, sticking to around 50 degrees Celsius even in the more intense benchmarks. The 10900K pushed up to 70 degrees, the hottest I’ve ever seen from a CPU in my own testing, but far from the hottest out there (my old GPU regularly hung out at 80 degrees). The 10900K is also a power-hungry chip with a peak power draw over 300 watts.

Ultimately, before you make any decision regarding a 10th Gen Intel CPU, make sure you compare against AMD’s Ryzen offerings as well. In many cases, Ryzen offers comparable or better performance for less. A Ryzen 9 3900X, with 12 cores and 24 threads, is currently on sale for $649.

While the 3900X’s max boost isn’t as high at 4.6GHz, it draws less power and the extra cores help with performance in productivity workloads. In gaming, Intel will likely continue to lead the way.

Intel’s newest CPUs are good, but the company seems to have milked 14nm for all it’s worth now. It remains to be seen if Intel can move past the process next year, or if it will find a new way to squeeze more out of 14nm.

MobileSyrup may earn a commission from purchases made via our links, which helps fund the journalism we provide free on our website. These links do not influence our editorial content. Support us here.