Google announced several new features and improvements coming to its Search products during Google I/O, the company’s annual developer conference.

During the opening I/O 2021 keynote, the search giant shared details about ‘MUM,’ as well as new Lens and AR features. First up, Google Search is set to get smarter with the launch of a new artificial intelligence model dubbed ‘MUM’ that can better understand the intent behind a search query.

The Multitask Unified Model, or MUM, is a follow-up to 2019’s Bidirectional Encoder Representations from Transformers (BERT), another AI model that helped improve Google’s understanding of search queries. Google says MUM was built on the same architecture as BERT, but is 1,000 times more powerful. Plus, as the name implies, MUM can multitask.

MUM can understand and generate language and is trained across 75 different languages. This, combined with its ability to manage multiple tasks, allows it to better understand information and knowledge than BERT. Google also touts MUM as multi-modal, which means it can understand and transfer information across text and images. In the future, Google says MUM will be able to do the same with video and audio.

Google says it’s testing MUM internally. However, when it does bring MUM to Search, users can expect it to transform their queries. The search giant used conversational queries as an example of how MUM could shine, noting that the model better understands complex, conversation-like questions, which allows it to surface better answers. Moreover, MUM can draw information from multiple sources and transfer insights from multiple languages into the one a user searches in.

Finally, Google suggested MUM would be able to take multiple modes of search into account, such as combining a text question with a related picture. For example, a user could ask Google whether their current hiking boots would be adequate for a specific mountain and include a picture of the boots, which MUM could compare with online information to provide an answer.

Clarifying results, Lens and AR

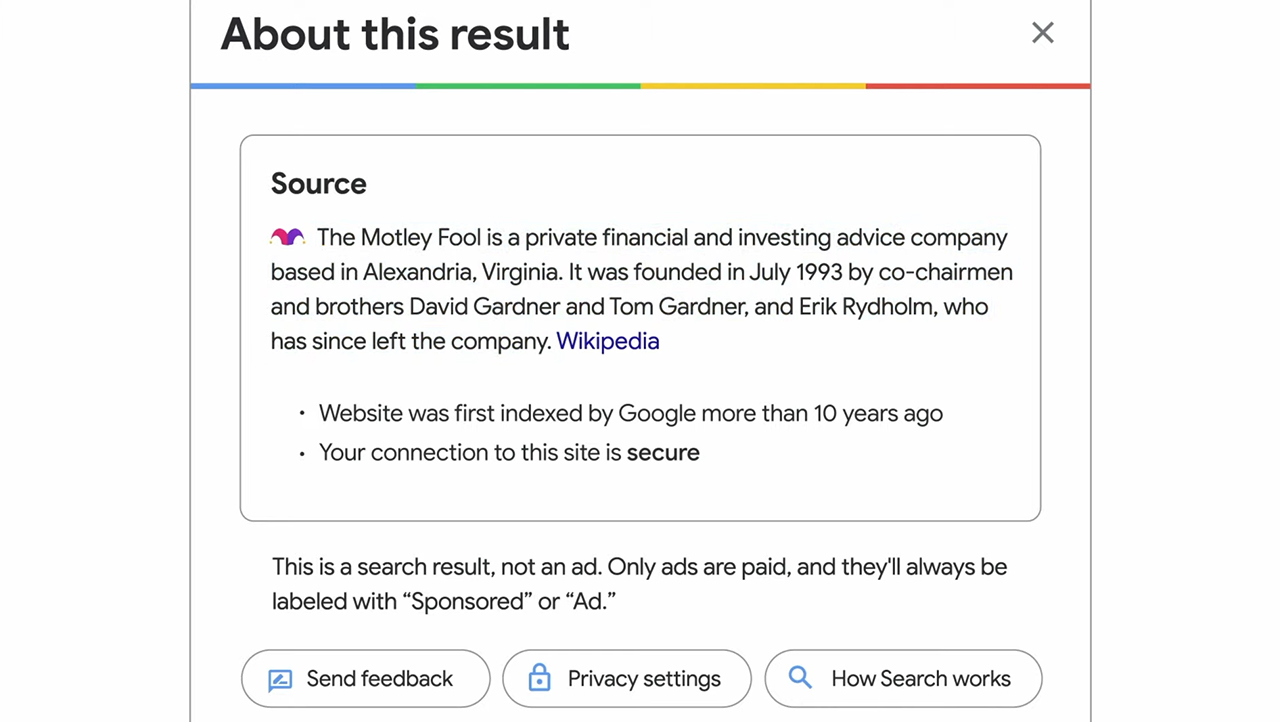

Along with making Search smarter, Google announced changes to how Search shows where information comes from. Dubbed ‘About This Result,’ the feature will surface details about a result’s source, such as a description of the website it came from, when Google first indexed it and whether the website offers a secure connection.

Google plans to roll out About This Result to all English results worldwide this month, with more languages coming later this year. Further, Google says it will add more details to About This Result in the future, including information about how a site describes itself, what other sources say about it and related articles for searchers to look at.

The company also plans to improve Google Lens and augmented reality (AR) search with new features. For Lens, Google will update the Translate filter to allow users to copy translated text, listen to it or search for the translated text. This change comes as Google saw over three billion visual searches on Lens each month in 2021.

As for AR Search, Google plans to expand the feature to include athletes. Currently, users can look up various animals, science diagrams and more with Search and use the built-in AR feature to place a 3D model of the item in their space. With the addition of athletes, users will be able to get an up-close look at complex sports moves from gymnasts, soccer players, skateboarders and more.

The new AR features will launch on May 18th in the Google app on Android and iOS, and also in Google Search in supported browsers on supported devices.

Image credit: Google
