Meta and its Reality Labs division have been working out what it takes to build next-generation displays for the company’s virtual, augmented and mixed reality headsets.

Current VR systems provide the user with an immersive experience that offers a sense of being in a different place, but according to Meta CEO Mark Zuckerberg, we still have a long way to go until we achieve visual realism.

Displays that eventually match the full capacity of human vision are going to unlock interesting VR experiences, but getting there is a long haul.

During a recent Meta briefing, Zuckerberg explained that human vision is complex and deeply integrated, so a good screen alone isn’t enough; other visual cues are needed to create a feeling of immersion.

“You need stereoscopic displays to create 3D images. You need to be able to render objects and focus your eyes at different distances, which is different from a traditional screen or display where you only need to focus it at one distance, where you’re holding your phone or your monitor is,” said Zuckerberg while talking about the challenges of developing realistic and immersive VR experiences.

He went on to mention that you need displays that can approximate the brightness and dynamic range of the real world, realistic motion tracking, and a graphics pipeline to get the best performance out of the in-device CPU and GPU without making it run too hot.

Finally, all of those elements need to be integrated into a compact machine that is lightweight and comfortable to wear. “If any of these pieces aren’t implemented well, it breaks that feeling of immersion. And you really feel that way more than you would on a typical 2D screen today,” said Zuckerberg.

For a VR machine to be indistinguishable from what we see with our eyes, it needs to pass a “Visual Turing Test,” and no current VR technology has been able to do so.

To pass the visual Turing test, Reality Labs Research’s Display Systems Research (DSR) team is developing a new technology stack that includes:

- Varifocal technology: Ensures the focus is correct and enables clear and comfortable vision within arm’s length for extended periods of time.

- Distortion correction

- Resolution that approaches or exceeds 20/20 or 6/6 human vision

- High dynamic range (HDR) technology that expands the range of colour, brightness, and contrast in VR

Meta’s DSR has developed four prototypes that aim to provide solutions to the above-mentioned hurdles.

Half Dome Series

The Half Dome series tackles the Varifocal aspect of creating an immersive experience.

Back in 2018, DSR expanded the field of view of the Half Dome 1 to 140 degrees, and focused on ergonomics and comfort on the Half Dome 2 by making the headset’s optics smaller, and reducing the overall weight of the device by 200 grams.

Then in 2019, with the Half Dome 3, DSR applied electronic varifocal to the headset, replacing the Half Dome 2’s moving mechanical parts with liquid crystal lenses, resulting in a further decrease in the headset’s size and weight.

According to Meta, for varifocal to work as intended, optical distortion needs to be further addressed. “The correction in today’s headsets is static, but the distortion of the virtual image changes depending on where one is looking. This can make VR seem less real because everything moves a bit as the eye moves,” reads the company’s press release about the development of said prototypes.

According to Michael Abrash, chief scientist at Meta’s Reality Labs, “The problem with studying distortion, though, is that it takes a really long time: fabricating the lenses that you need to study the problem can take weeks or months, and once you have them, you still have a long process of building a functional display system with them.”

DSR developed a VR distortion simulator that employs virtual objects and eye-tracking to simulate the distortion seen in a headset for a specific optics design and displays it using 3D TV technologies, allowing the team to study different optical designs and distortion correction algorithms without having to develop an actual headset.

Butterscotch

For Meta to create a VR technology that is immersive and uber-realistic, it needs to achieve a resolution that can match the human eye, and that means getting up to about 60 pixels per degree in the display.

Screens around us today, including our TVs and phones, have long surpassed the 60 pixels per degree benchmark, which means that they can replicate 20/20 or 6/6 vision, but creating this in a compact headset has been a challenge.

“VR lags behind because the immersive field of view spreads available pixels out over a larger area, thereby lowering the resolution. This limits perceived realism and the ability to present fine text, which is critical to pass the visual Turing test,” reads Meta’s release.

To achieve near retinal resolution, DSR reduced the field of view to around half that of the Quest 2, designed a new hybrid lens and deployed it to a prototype called “Butterscotch.”
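The trade-off between field of view and resolution can be illustrated with a rough back-of-the-envelope sketch. It assumes angular resolution is simply the pixel count across the view divided by the field of view in degrees, which ignores lens distortion and the fact that real headsets vary in resolution across the image. The pixel count and field-of-view values below are hypothetical round numbers chosen for illustration, not specifications of the Quest 2 or Butterscotch.

```python
import math


def pixels_per_degree(horizontal_pixels: int, fov_degrees: float) -> float:
    """Average angular resolution: pixels divided by the degrees they span."""
    if fov_degrees <= 0:
        raise ValueError("fov_degrees must be positive")
    return horizontal_pixels / fov_degrees


def pixels_needed(target_ppd: float, fov_degrees: float) -> int:
    """Pixels required across the view to hit a target pixels-per-degree."""
    return math.ceil(target_ppd * fov_degrees)


# Hypothetical values for illustration only (not real headset specs).
PIXELS = 2000               # horizontal pixels per eye
WIDE_FOV = 100.0            # degrees, a wide immersive field of view
NARROW_FOV = WIDE_FOV / 2   # halving the field of view

wide_ppd = pixels_per_degree(PIXELS, WIDE_FOV)      # same pixels, wide view
narrow_ppd = pixels_per_degree(PIXELS, NARROW_FOV)  # same pixels, half the view

print(f"wide view:   {wide_ppd:.0f} pixels per degree")
print(f"narrow view: {narrow_ppd:.0f} pixels per degree")
print(f"pixels for 60 ppd at {WIDE_FOV:.0f} degrees: {pixels_needed(60, WIDE_FOV)}")
```

Under these assumptions, halving the field of view doubles the pixels per degree without adding a single pixel, which is why narrowing the view is a quick route to higher angular resolution in a research prototype.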

Butterscotch is “nowhere near shippable,” but it excels at demonstrating how much of a difference increased resolution makes in providing a realistic VR experience.

Starburst

“While resolution, varifocal, and distortion all make a meaningful contribution to realism, arguably the most important dimension of all is high dynamic range or HDR,” said Zuckerberg.

HDR refers to the range of brightness and contrast a display can reproduce. According to Zuckerberg, the vividness of the screens we have now, compared with what the eye sees in the physical world, is off by an order of magnitude. The key metric for HDR is the nit, a unit of luminance that indicates how bright a display can get. Traditional TVs can reach a few thousand nits, but in VR the maximum right now is about 100 nits, and that is on the Quest 2.

“We’re going to need to get to significantly higher brightness levels than what we refer to as HDR on traditional screens today,” said Zuckerberg. “And then of course, the challenge is we need to do that in something that is battery powered and comfortable to wear.”

Starburst is a prototype HDR headset that, although nowhere near shipping condition, can produce the full range of brightness typically seen in indoor or nighttime environments.

The bulky prototype reaches 20,000 nits of brightness and is the first HDR VR system. “We’re using it to test and for further studies so we can get a sense of what the experience feels like,” said Zuckerberg. The aim with Starburst is to study how HDR would help in hyper-realistic VR experiences and, eventually, to shrink it all into a compact, shippable headset.
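To put the brightness figures in perspective, here is a small arithmetic sketch comparing the two peak values quoted in this article: about 100 nits for the Quest 2 and 20,000 nits for Starburst. It expresses the gap as a plain ratio, in orders of magnitude (base-10 logarithm), and in photographic stops (base-2 logarithm, where each stop is a doubling). Note that this compares peak brightness only; a display's full dynamic range also depends on how dark its blacks can get, which the article does not state.

```python
import math

# Peak brightness figures as quoted in the article (approximate).
QUEST_2_NITS = 100.0
STARBURST_NITS = 20_000.0


def brightness_ratio(brighter_nits: float, dimmer_nits: float) -> float:
    """How many times brighter one peak luminance is than another."""
    if brighter_nits <= 0 or dimmer_nits <= 0:
        raise ValueError("luminance values must be positive")
    return brighter_nits / dimmer_nits


ratio = brightness_ratio(STARBURST_NITS, QUEST_2_NITS)
orders_of_magnitude = math.log10(ratio)  # powers of ten
stops = math.log2(ratio)                 # doublings of brightness

print(f"ratio: {ratio:.0f}x")
print(f"orders of magnitude: {orders_of_magnitude:.2f}")
print(f"stops: {stops:.2f}")
```

By these figures, Starburst's peak is 200 times that of the Quest 2, or a little over two orders of magnitude.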

Holocake 2

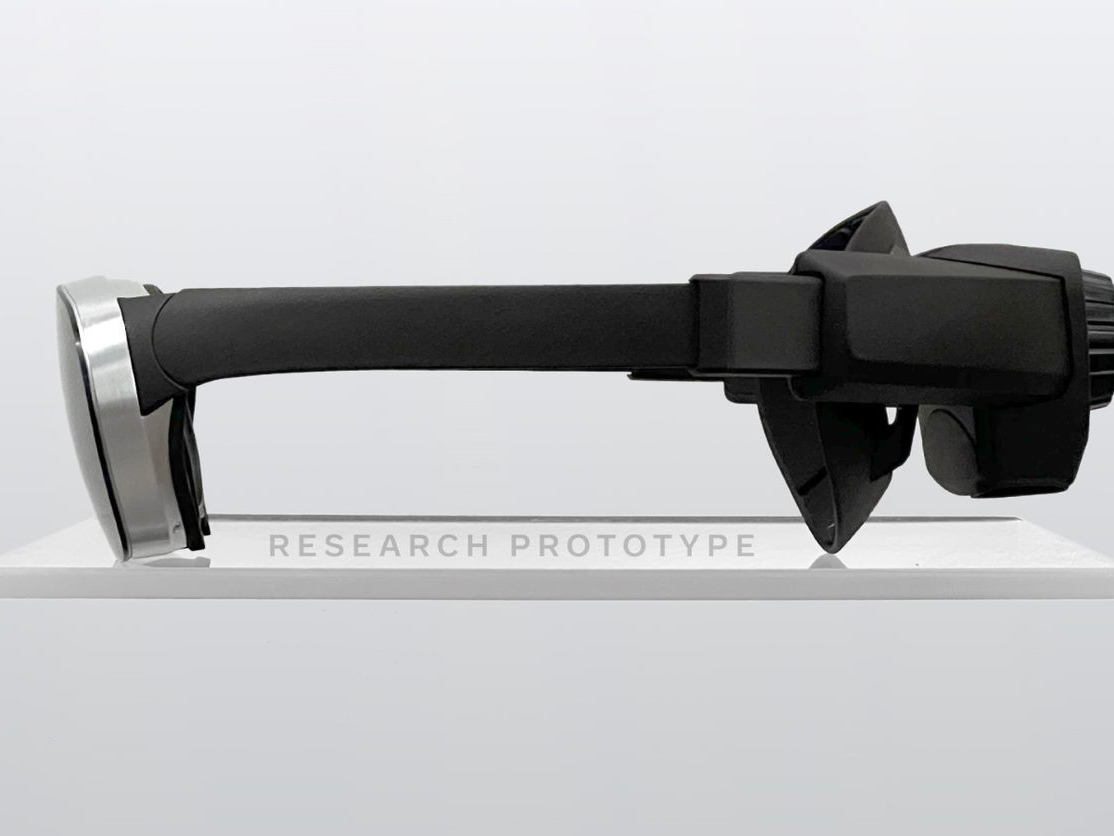

Holocake 2 is an experimental device, which Meta says is the thinnest and lightest VR headset it has made to date, and can run any existing PC VR title.

“In most VR headsets, the lenses are pretty thick and they have to be positioned a few inches from the display so they can properly focus and direct light directly into your eyes,” said Zuckerberg. “And this is what gives headsets that look where they’re pretty front heavy.”

To get around the thick form factor issue, Meta had to alter the headset’s lenses.

Instead of shining light through a lens, the Holocake 2 shines the light through a hologram of a lens. Further, the Holocake 2 uses polarization-based optical folding (pancake optics) to reduce the overall gap between the display panel and the holographic lens, resulting in a headset with a much more compact form factor.

“This is our first attempt at a fully functional headset that leverages holographic optics, and we believe that further miniaturization of the headset is possible,” reads Meta’s press release.

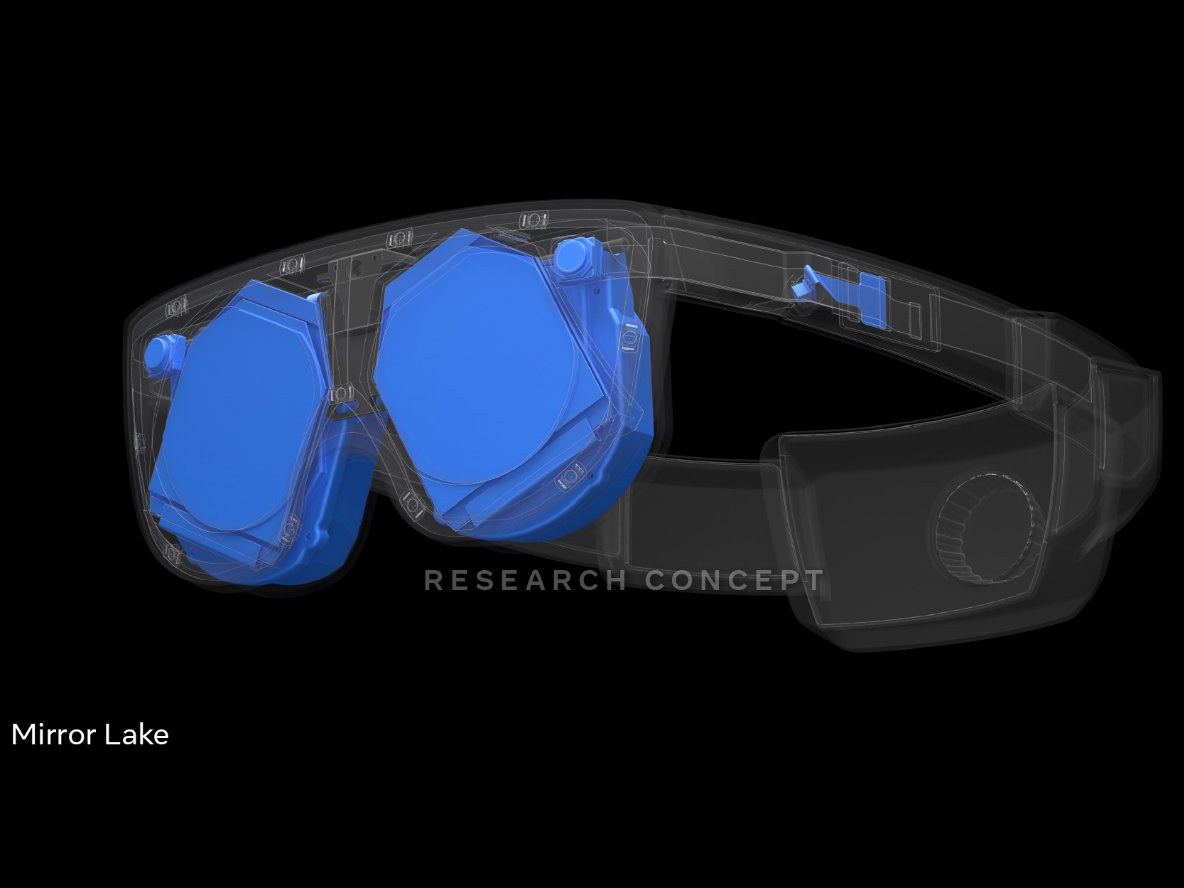

The ultimate goal is for Meta to combine the technical prowess of all the above-mentioned prototypes into one compact device that can pass the visual Turing test, and “Mirror Lake” is one of several potential pathways to that goal.

Mirror Lake is more of a concept than an actual physical prototype. “Mirror Lake is a concept design with a ski goggle-like form factor that takes the Holocake 2 architecture and then adds in nearly all of the advanced visual technologies that we’ve been incubating over the past seven years, including varifocal and eye-tracking,” said Abrash.

Everything in the headset is thin and flat. The varifocal technology deployed is flat, and so are all the holographic films used for Holocake. “It’s easy to keep adding thin, flat technologies. This means that the end product can pack more functionality into a smaller package than anything that exists today,” said Abrash.
