At its annual Search On event, Google showcased its efforts to make Search more natural and intuitive for users. The event’s theme was to ‘search outside the box,’ in keeping with the company’s mission to ‘organize the world’s information and make it universally accessible and useful.’

The tech giant outlined upcoming developments for Google Search, Google Maps and Google Shopping.

Google Search

Multi-Search Near Me: Announced at Google I/O 2022, the multi-search near me feature allows users to take photos of objects like food, supplies, clothes and more, and add the phrase “near me” in the Google app to get search results showcasing local businesses, restaurants or retailers that carry that specific item. Google runs the image through its database and cross-references it with several photos, reviews and web pages to deliver accurate nearby results. The feature is rolling out in English in the U.S. this fall.

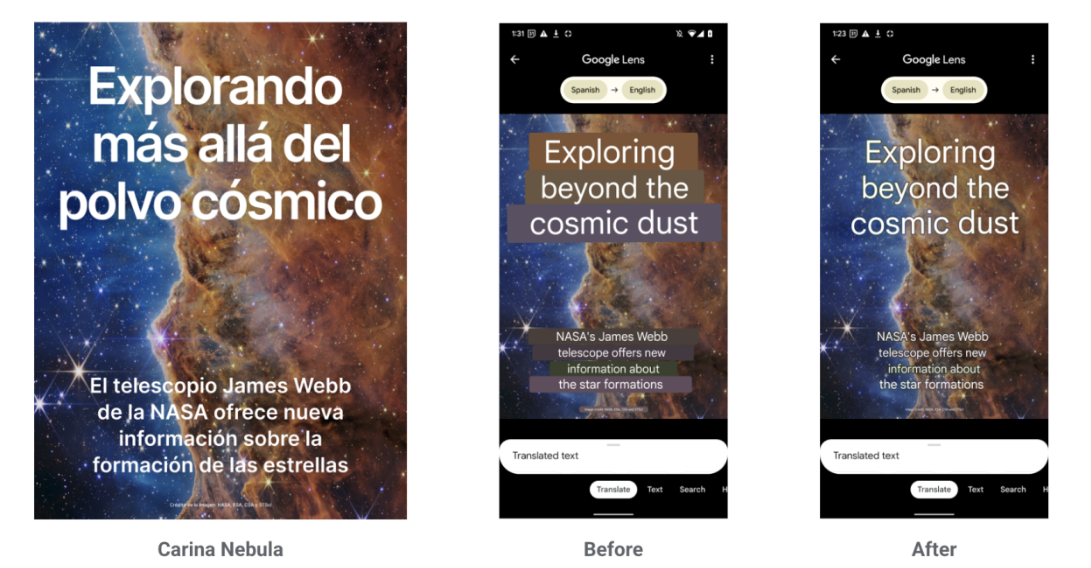

Translate with Lens: According to Google, translations with Google Lens are used roughly one billion times per month in more than 100 languages. Originally, the feature translated the text in an image and overlaid the translation on the photo as extra text, which would block or distort the image behind it. Now, using a machine learning technique called generative adversarial networks, or GANs (the same technology used for Magic Eraser on Pixel phones), Google Lens can translate the text and blend it back into the background image without distorting it, so the image retains its natural look. See the screenshot below for reference. ‘Lens AR translate’ is rolling out globally later this year.

Shortcuts: Launching today, September 28th, you’ll see some of Google’s most helpful tools and shortcuts, including translate, ‘shop your screenshots,’ ‘hum to search,’ and more, displayed right on top of the Google app (under the Search bar). The shortcut feature is rolling out for the iOS application in English in the United States.

Easier to ask questions: Google is making it easier for users to ask the questions they intend to ask. When you start typing a query, Google will now suggest keywords or topics that might help you better craft your questions. “Say you’re looking for a destination in Mexico. We’ll help you specify your question — for example, ‘best cities in Mexico for families’ — so you can navigate to more relevant results for you,” says Google. The highlighted keywords, and the subsequent results will be relevant to your query, and will include content from creators available online. “For topics like cities, you may see visual stories and short videos from people who have visited, tips on how to explore the city, things to do, how to get there and other important aspects you might want to know about as you plan your travels,” says Google.

Additionally, the search results you’ll see will come in various formats. Depending on the relevancy of your query, the results can be shown as text, images, videos or a combination of those formats. This new format of asking questions on Google Search will roll out in the U.S. in the coming months.

Google Maps

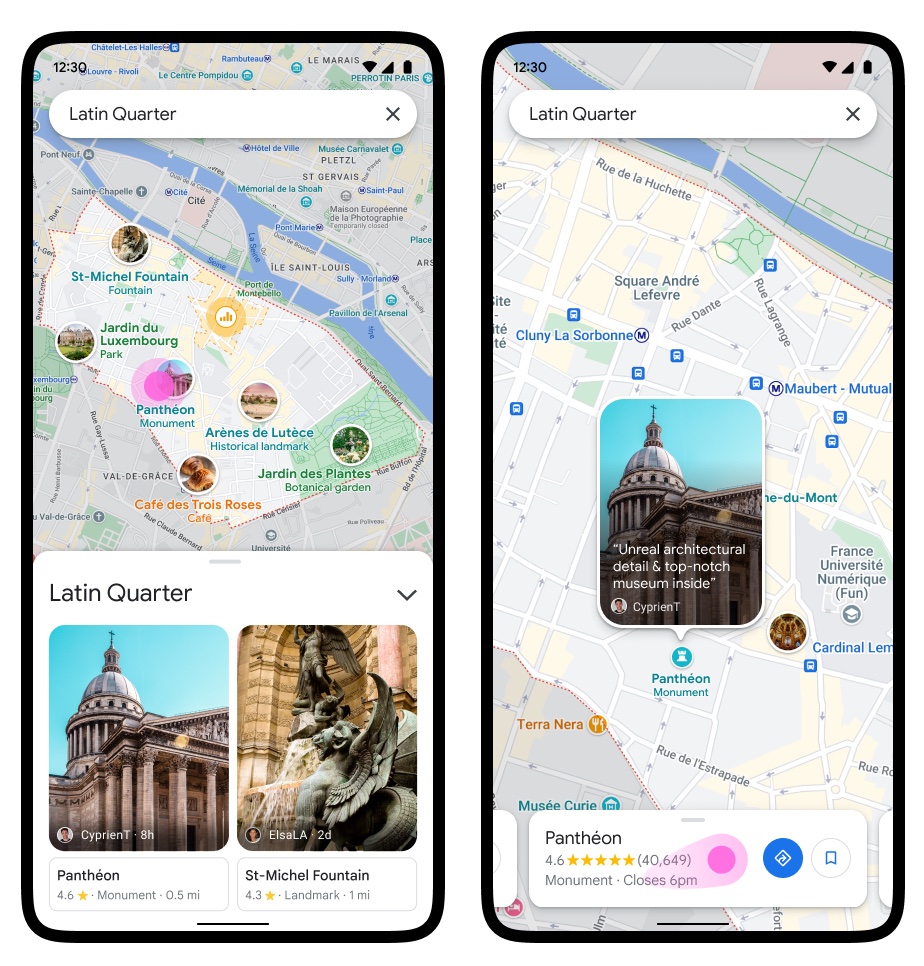

Google intends to make Maps more visual and immersive, and it is starting off with new features that aim to make you feel like you’re physically in the location that you are searching for.

Neighbourhood Vibe: The Neighbourhood Vibe feature allows you to quickly check popular locations in a given area, like famous restaurants, cafes, museums and other landmarks that people search for. Results appear alongside helpful photos and information right on the map. The feature is launching globally on Android and iOS in the coming months.

Landmark Aerial View and Immersive View: Landmark Aerial View allows users to see photorealistic aerial views of up to 250 key global landmarks like the CN Tower, Eiffel Tower, Tokyo Tower and more. The aerial view also lets you take in the landmark’s surroundings, making it easier to decide whether you want to visit the location.

Immersive View, on the other hand, works in tandem with Aerial View, and allows users to plan their trip ahead of time by checking what the weather, traffic and crowd will be like at a given place on a given day and time.

For example, you may search for the CN Tower in Toronto and see that finding parking around the landmark is a pain, so you decide either to skip the visit or to take public transport instead.

Landmark Aerial View is rolling out globally on Maps today, September 28th, whereas Immersive View is launching in Los Angeles, London, New York, San Francisco and Tokyo in the coming months.

Live View: First released three years ago, Google’s Live View is an augmented reality (AR) tool that overlays walking directions on your live camera feed. Simply lift and point your phone to find directions and essential places like shops, ATMs and restaurants. The feature has long been available in Canada, and will start rolling out in London, New York, Paris, San Francisco and Tokyo in the coming months.

Eco-Friendly Routing expanding to developers: Google launched its Eco-Friendly Routing feature for Google Maps in Canada earlier this year. The feature shows users the estimated carbon emissions of their planned route and suggests alternative directions that would consume less fuel, while taking traffic, road steepness and other variables into account.

Developers will now have the option to add the feature to their companies’ apps, so that drivers for services like SkipTheDishes, Uber Eats and Amazon can select their engine type and find the most fuel- or energy-efficient routes and directions. The feature will be available in Preview later this year for developers in the U.S., Canada and select countries in Europe where Eco-Friendly Routing is available.

Google Shopping

Google is introducing new tools for Shopping that aim to help users discover things they’ll like by providing visual cues, insights about products and services, and filters to trim down options.

3D shopping for shoes: Some websites allow users to take a 3D look at shoes from different angles. According to Google, people engage with 3D images almost 50 percent more than static ones.

Websites need to have this feature built in, and some smaller businesses don’t have the resources to do that. This is where Google comes in. The company says it can now automate 360-degree spins of shoes using just a handful of photos, essentially the ones already available on the merchant’s website, allowing users to check out shoes in 3D regardless of whether the merchant’s own page offers the feature. This feature will be available in Google Shopping in the U.S. in early 2023.

Shopping Insights: The new feature will provide noteworthy insights about the product category you’re browsing for. Google says insights will be gathered from a “wide range of trusted sources,” and compiled in one place. For example, if you’re searching for mountain bikes, Google will provide insights about the size of the bike you should be riding, information about brakes and suspension, popular trails around you and more.

The feature is rolling out for Google Shopping today, September 28th.

Dynamic shopping filters: Normally, filters allow you to sort results by price and relevancy. With new shopping filters on Google, users will be able to shop by category. For example, if you’re looking for jeans, you’ll be able to filter them by ‘skinny,’ ‘wide leg,’ ‘boot cut,’ and more. Similarly, for T-shirts, filters such as round neck, crew neck, baggy, and more will allow you to tailor the Google Shopping experience to your needs.

Dynamic Filters for Google Shopping will be “available soon” in select countries in Europe.
