OpenAI is taking user privacy seriously.

The company announced today that ChatGPT users can now turn off their chat history, letting them choose whether their conversations can be used to train OpenAI’s language models.

According to the company, conversations that are started when chat history has been disabled won’t be used to train the company’s models, and they won’t even appear in the history sidebar.

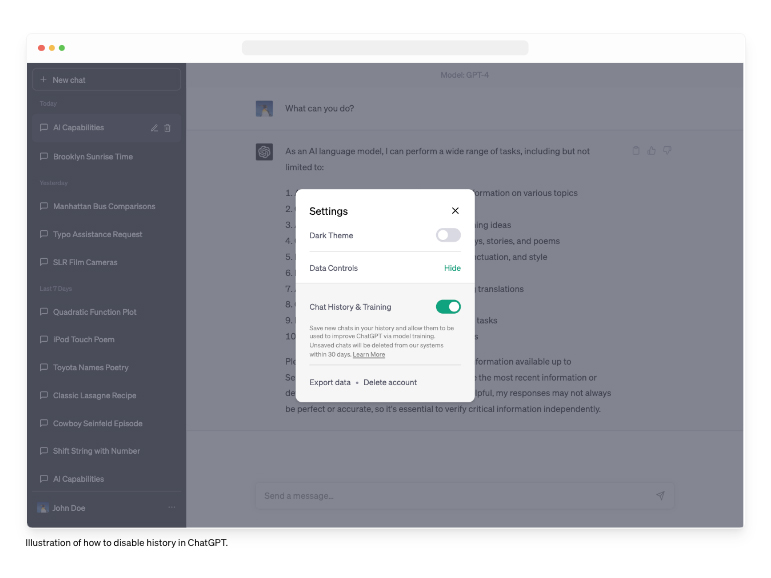

The control is rolling out now, and can be toggled on/off in ChatGPT’s settings.

OpenAI says that even with chat history disabled, it will retain conversations for 30 days so it can review them when needed. After that 30-day period, it will permanently delete the data.

In addition to the chat history control, OpenAI is working on a new ChatGPT Business subscription that gives users more control over their data and is also aimed at enterprises “seeking to manage their end users.” The new subscription will be available “in the coming months.”

Lastly, OpenAI is introducing a new ‘Export’ option that lets users export their ChatGPT data and “understand what information ChatGPT stores.” When you export your data, you’ll receive an email with a file containing your conversations and all other relevant data.

Image credit: OpenAI

Source: OpenAI
