StabilityAI, the company behind the Stable Diffusion AI image generator, is releasing the first models in its StableLM suite of language models.

The new open-source language model is called StableLM, and it is available for developers on GitHub. “Developers can freely inspect, use, and adapt our StableLM base models for commercial or research purposes, subject to the terms of the CC BY-SA-4.0 license,” wrote StabilityAI in a blog post.

StableLM works similarly to OpenAI’s ChatGPT and is trained to generate text and code. It is trained on a larger version of the open-source dataset known as the Pile. “The richness of this dataset gives StableLM surprisingly high performance in conversational and coding tasks, despite its small size of 3 to 7 billion parameters (by comparison, GPT-3 has 175 billion parameters),” wrote StabilityAI.

StabilityAI is also releasing research models "that are instruction fine-tuned" on a combination of five recent open-source datasets for conversational agents: Alpaca, GPT4All, Dolly, ShareGPT, and HH.

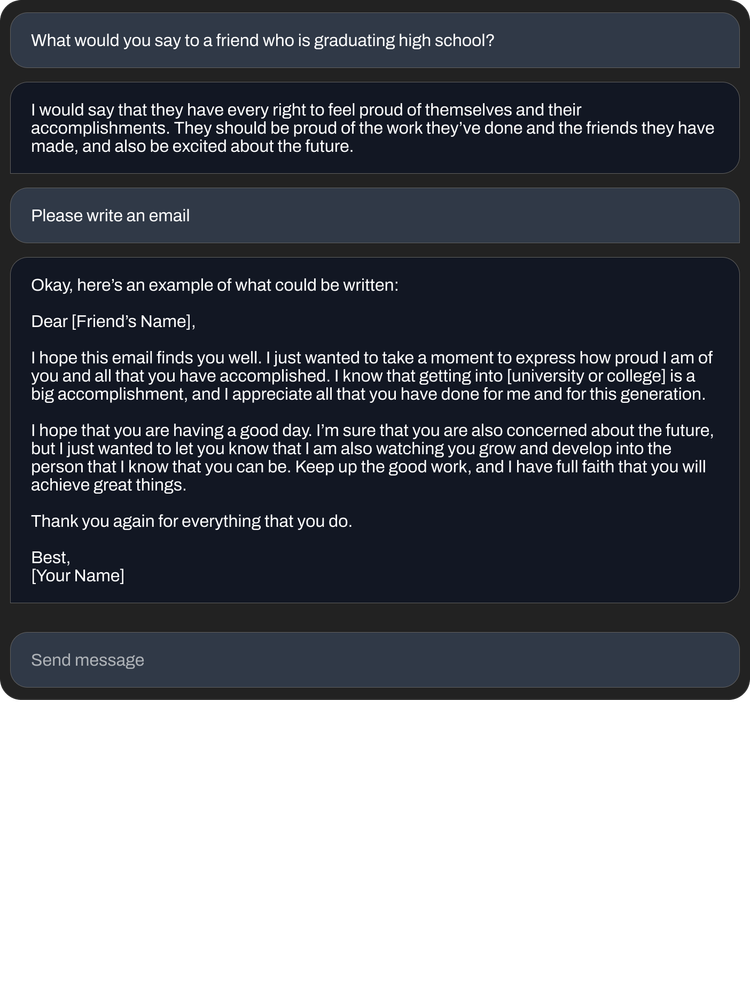

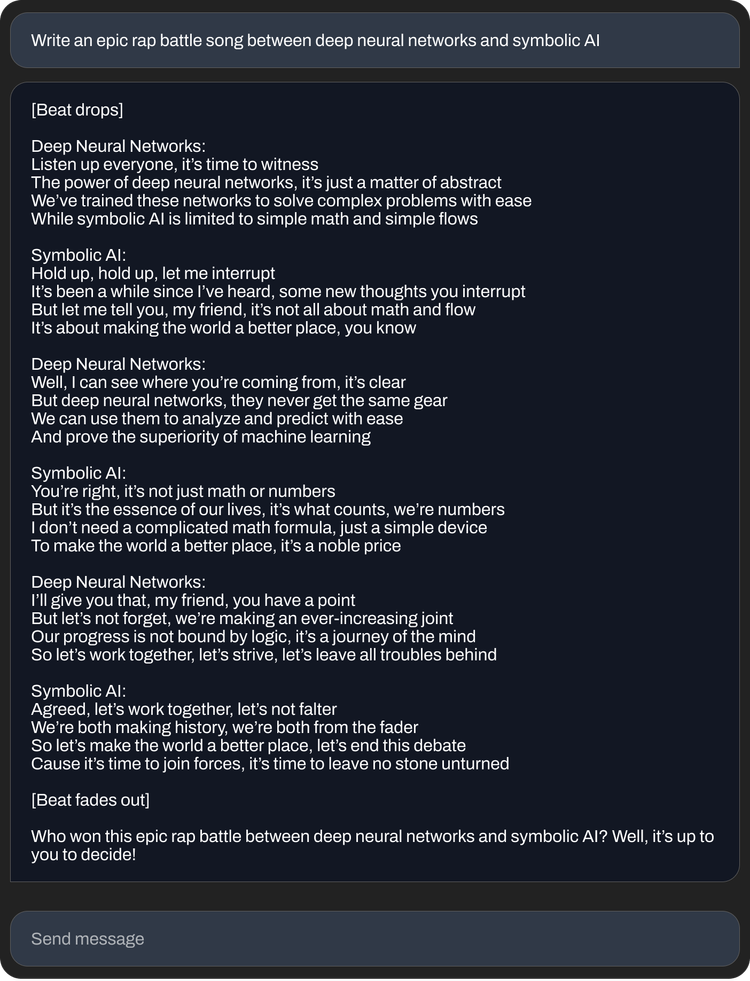

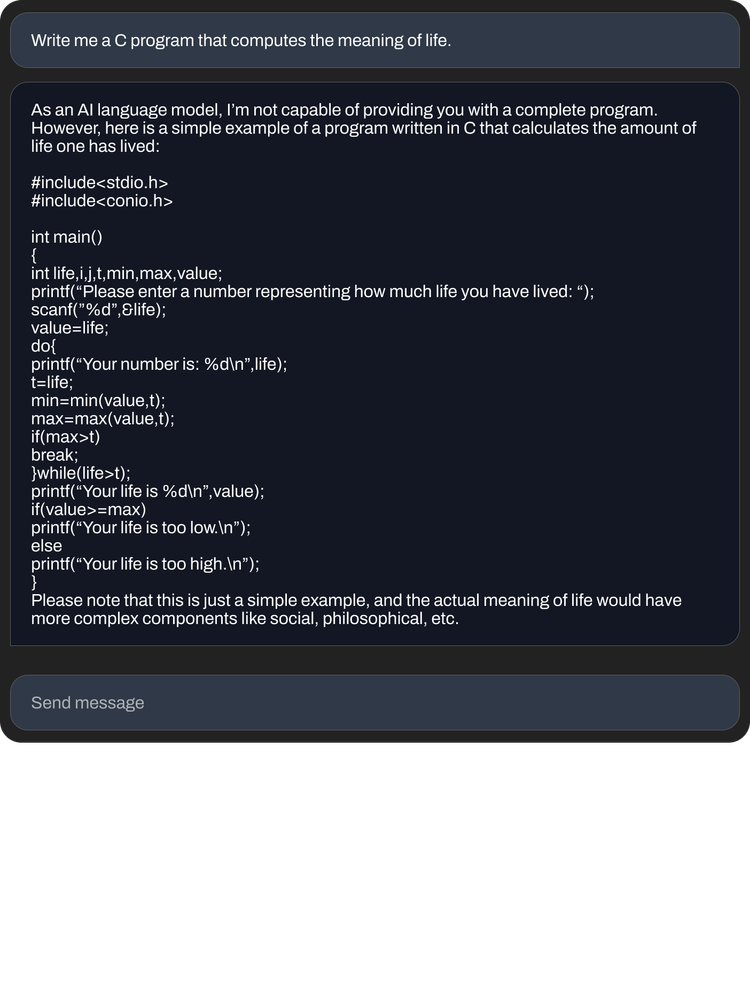

Check out some examples of StableLM’s capabilities below:

A demo version of StableLM is available to try out now on Hugging Face. More details about the new model are available in StabilityAI's announcement blog post.

Image credit: StabilityAI, Shutterstock

Source: StabilityAI
